Abstinence is a word that frequently applies to me. Alcohol, coffee, cigarettes, drugs, Facebook, Twitter, Insta, TikTok, Bitcoin, stocks, email on smart phone, and lots more: not for me, thanks. I also semi-abstain from various energy/resource-heavy activities. Part of this tendency may have been shaped by the Raiders of the Lost Ark movie, where characters Indiana Jones and Marion are tied to a post in the rear of a crowd waiting to see the marvels inside the Ark of the Covenant. Indy had the instinct to shut his eyes, and advised Marion to do the same, which spared them and only them. Just because everybody else is doing it is not reason enough.

Abstinence is a word that frequently applies to me. Alcohol, coffee, cigarettes, drugs, Facebook, Twitter, Insta, TikTok, Bitcoin, stocks, email on smart phone, and lots more: not for me, thanks. I also semi-abstain from various energy/resource-heavy activities. Part of this tendency may have been shaped by the Raiders of the Lost Ark movie, where characters Indiana Jones and Marion are tied to a post in the rear of a crowd waiting to see the marvels inside the Ark of the Covenant. Indy had the instinct to shut his eyes, and advised Marion to do the same, which spared them and only them. Just because everybody else is doing it is not reason enough.

In this light, I am practically ashamed to admit to using AI for image creation. It’s not like me. On the text-generation side (chat-bots), while I think it’s a pretty neat stunt they’ve pulled off, I am deeply unimpressed by the milquetoast blather that comes out of ChatGPT and its ilk when asked hard questions. When students tried to use AI to submit answers to questions, I could spot the style from across the room (based on simple formatting). It is not too surprising that nothing remarkable comes out, since the chat-bots are designed to construct sentences very much in the style of what it’s parsed in all its input data. They aren’t producing original thoughts, but function more as an averaging content generator.

But images: I’m more impressed—in that it’s more mysterious to me how it works. The parameter space is much larger than for regimented language. Even so, I think it’s actually somewhat similar to language in that images also have structure, and certain things tend to be close to certain other things, as is true for words as well.

When selecting a copyright-free header image for a Do the Math post, I usually have an idea for what I want it to show, and first look for pre-made content. If I can’t find something via a few channels, I now turn to AI, using the Bing interface to Dall-E. Admittedly, I’m no expert at speaking AI, but I still marvel at how inept it can be in forming an image that a 4-year-old would have little trouble picturing if using the same prompt language.

I was planning to do a post illustrating my failures over time, but was dismayed to learn that my past creations have disappeared on the Bing platform (not unique to me). What’s worse, I no longer have prompt language for the ones I kept. The EXIF information in the JPEG files lack any such prompt text. Why is it that the world is still being designed by unpaid interns?

So, this post isn’t all that I hoped it would be, given that loss. But, as with many things in life, I’ll work with what I have.

I’ll go in chronological order according to my personal exposure to AI image capabilities. My chief problem seems to be that I want my images to tell a story, which involves relationships, and this is the main feature AI seems to lack. Since I no longer have the prompts for the early work, the more complete lessons come later in the post, relating to more recent efforts.

A word up front about my prompt choices. I often specify a man, not for sexist reasons but because I am often making fun of the man-human. When I look to assign blame and ridicule, it is more likely to land on a man.

Snail on Butt

I was preparing a colloquium (recorded here) and wanted an image to illustrate an example. I wanted hunter-gatherer-looking people walking along a trail, with a snail crawling up the butt of the person in front, while the ones behind pointed and laughed. I was not yet hooked up with AI, but turned to a friend who had by that time produced many works on various platforms. The process was literally like a game of telephone, as I texted him concepts that I had to revise as the failures piled up.

The original instructions I gave my friend: “Hunter-gatherers walking along a path; the one in front has a large snail or some critter stuck to his butt or back that the others put there for fun, and are now laughing their asses off behind him in amusement.” This example may seem very random, but something like it happened to me while scuba diving decades ago: friends had stuck a sea star to my neoprene butt (worked like velcro), and were laughing a prodigious column of bubbles. Once I caught on, I made my own unbroken stream of bubbles.

Anyway, I can’t show the first two versions, because my friend for some reason decided to prompt using the word “cavemen,” which produced cartoon-ish representations that seemed a touch racist. But as we iterated, this image popped out.

It’s got the forest. The people seem to be laughing, alright. They look militia-like (seem to be armed). A little creepy. We gave up, but my friend also shared this four-panel of the snail theme that he had produced and discarded along the way.

Holy crap, that’s some twisted $#!+. “Hey, I’m going backpacking with my new giant snail friend. Want to come along? At what pace?”

Learning to Walk Again

For the Learning to Walk Again post, I wanted an image of a person or persons reaching shore after a shipwreck. Here are the creations (I no longer have the prompts).

This was okay. Pretty impressive in a number of respects (depth of field, among them). I could not have generated anything nearly as visually rich on my own. The face and hands are a little distorted, but that comes with the territory in AI imagery. But I decided to try again.

Erm. Sort-of. Strange body proportions (low knees?), but it could do. I decided to go with a group of people instead.

Faces are distorted/pinched, legs can be strange, and one is either walking back into the water or has a very hairy face. Unclear what’s happening with the left arms of the two at front right, and as is often the case, don’t look too closely at fingers. Still, I went with this one. Perfectionism and AI art don’t pair well, I find.

Giant Newt

I wanted to emphasize that nature is “bigger” than people. So I asked for a friendly newt that was very large compared to people. AI can do this sort of thing relatively well: objects, with minimal relationship/story. I got these two:

I went with the second, because I liked the community feel, and also that the newt looked friendlier—less likely to suddenly snap up a child.

Deer in the Headlights

For my post on a deer in the headlights, I had a specific arrangement in mind that was important to the narrative. See how hard it is for your own brain to build this picture. We’re looking at a narrow two-lane road with no shoulders and either guard rails or steep bluffs on either side so that swerving out of the two lanes is not possible. A car approaches from the distance and another is heading the other direction away from the foreground. A deer stands in the middle of the lane in front of the foreground car.

I messed around with this for many frustrating iterations, constantly getting garbage that ignored my intent as expressed in the prompts. I tried to reproduce some of this progression here, and the results are similarly baffling (to me).

When I use the prompt: “two lane road narrow no shoulders guard rail two cars approaching each other one deer in right lane” I get the following four outputs (Bing packages four variants on each attempt for consideration):

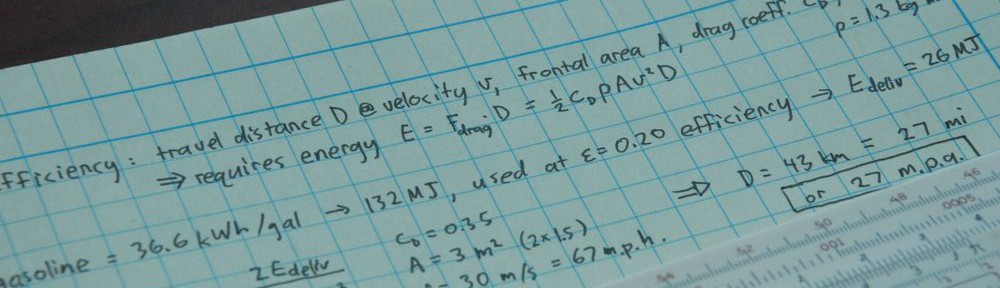

Three deer! One distant car. Unintelligible road sign. The trees are pleasant. The distant haze is a nice touch. The guard rail is realistically wavy. At least the road has a double yellow line (sometimes saw triple), but a long, straight section of road like this would likely allow passing (AI lacks such contextual reasoning).

What? Two cars in parallel (no passing zone, but again: no context); distant car is straddling the lanes (is everyone intoxicated?). Two deer in each lane: great—a total failure to correctly interpret the prompt. I love the signs: Quaral (a serious but underappreciated danger!) and De’Rail (also to be avoided). I get a kick out of the fact that the signs on both sides face the same direction, counter to how actual road signs tend to be deployed. Strange dash patterns appear in and around the center line.

Similar issues in terms of car placement, and a bit of a confusing jumble of cars in the distance. The centered deer will likely be alright in this case. It consistently gets trees and road/perspective looking decent: lots of images like this in the training set, I imagine.

Okay; at least traffic patterns make a little more sense, although the cars to the side are a little disconcerting. Road signs face one way. No call for no-passing. The deer is safe. Shadows are a nice touch, except for the missing croquet mallet in front of the deer that makes a shadow. Lovely country.

But all told, this is a failure. I could not use any of these to tell my story. So let’s try to nail down the prompt a little better: “two lane road narrow no shoulders guard rail two cars approaching each other, one in each direction, one deer in right lane in front of car”

Fail on counting cars and deer, and their respective arrangements. The signs again both point at the viewer, warning of conflicting curves that are not at all apparent in this gun-barrel-straight road. Hard to see how the one deer at left is still standing. The receding car makes glares from headlights (taillights?) that are not to be seen. Mirror-image side fields each show a single segment of wire connecting the light to a tree, as one does. Not usable.

Woah! Control yourselves! All cars heading one way; deer in middle. The cars must have some hellacious reverse lights beaming onto the road. I like the distant scenery and clouds, though.

Another failure on car and deer placement. Lovely mountains. The viewer-facing signs never fail to amuse. DEAP!

More close calls, again missing the intended arrangement completely. It’s hard to know how the oppositely-directed cars on the right would have looked a second before (overlapping cars?). More forward-facing-only signs. The car on the left appears to cast two shadows in different directions (a two-star planet?). I love how the yellow line is afraid to paint itself over the shadow, and wonder if that gap moves along with the car. The deer on the right is caught mid-jive.

Zero for eight, which was typical of this exercise. I finally went with the one below, as it’s semi-realistic, even if it does not show the second car on the right.

It could be that the AI engine is instructed not to show gruesome or dangerous scenarios, thus avoiding putting a deer directly in front of an approaching car (although sometimes it did so). In any case, that was the whole point of the thought experiment.

Cutting off the Branch

This is another one I had great difficulty navigating. I wanted to depict a person standing on a branch, sawing it off with a chainsaw between the trunk and the sawing person’s feet. You instantly understand the intended scene, right? My original attempts failed at every turn, no matter how specific I got. Sometimes it made the foot into a chainsaw. Sometimes it was indescribably bizarre. I tried again recently (having lost the original failures) and was blocked based on the nature of my prompt: “cutting off branch that man is standing on with chainsaw between feet and trunk“—too dangerous?

So I just tried again using: “man standing on branch, sawing it off between feet and trunk“—this time leaving chainsaw out in case that is a dangerous trigger word. All four image results featured a chainsaw:

At least this looks ill-advised, although the previous cut (inexplicably glowing) seems to be the main problem. Why hasn’t it fallen yet?

Okay, this guy is at least standing on a branch (downward sloping?) with the chainsaw, but has chosen a frontal assault on the trunk that is unlikely to accomplish much. Newbie.

This guy has one of those splintering chainsaws, apparently. Lots of dazzling light, too. The saw is somehow only toothed on one side. The tree is sideways, and the ladder is pretty sketch. The current cutting strategy does not meet the criteria and is no more dangerous than the ladder.

I have no idea what this guy got himself into. And did his hand shave off the wood? It’s just confusing, but not what I had in mind. Another unusable result.

Then I tried: “man standing on branch with chainsaw, sawing the branch between feet and trunk“—no safety blocks.

Hey, is that the same fake guy as before? Dude! Watch your foot! Yes, this looks dangerous, but fails to illustrate the central point of sawing off the branch that at least he’s standing on—except the interface between branch and tree looks off. Lots of odd little cuts here and there, and a carefully shaved branch top. This guy has no idea what he’s doing.

The same dude’s brother? He’s apparently annoyed by the bulgy imperfections on this branch (is it a branch?) and is intent on shaving it smooth. I guess I have little objection, but it fails to illustrate the point.

This is a “real” man from before color. Workin’ hard. Time for a cold one! I am left with little doubt that this cat knows what he’s doing and doesn’t need me kibitzing. Not in clear immediate danger.

Aside from the possible snap when the weight comes off, this cut is not obviously bad news—even if I wouldn’t try it. Similar chainsaw model as two back. Again it failed to deliver on the main idea.

The image below is the one I eventually had to use. It was the closest out of several dozen images to conveying the point I wanted to make. Very disappointing.

Oblivious to Danger

This one delivered a decent image on the first try, used in The Intransigence of Now. Sadly, I lost the prompt, but it would have had to do with eating at a table, oblivious to a tornado outside the window. It works sometimes. Just don’t ask how he’s holding the burger or what the fork is accomplishing.

Kid Asking Why

For a post asking why we pursue the things we do, I wanted a kid pestering an adult paying attention to other things, with the kid asking: why? why? why? I have lost the original prompt and results, but I attempted repeat, using: “kid pestering adult who is reading paper, asking why? why? why?”

Here is a typical result:

The angry kid appears to be shouting, not asking why—and the speech cloud originates from the silent and grumpy adult (who works sitting on the floor like most businessmen). In one of the variants, the speech cloud unambiguously emerged from the adult’s shoulder. In another, it was the newspaper’s large headline that asked WHY?. I ended up going with the following photo-realistic scene that missed the “why” but still communicated the mood (by accident, I am convinced, based on the others in the set).

Prairie Scene

For the post on A Story of Mice (and Men), I wanted a natural prairie with flowers and grasses, featuring a single silo. I have lost most of the prompts and failures, but had a devil of a time getting just one silo. They often came in pairs and six-packs. The final prompt was “prairie grasses and flowers in foreground; old single silo in background.” Even with this, a single silo was rare. The result I ended up using was pretty, but it was another exercise in frustration.

Goose Chase

Now we’re into the recent pile where I still have prompts and failures. For the Unsustainable Goose Chases post, I used the prompt: “man wearing solar panels and wind turbine chasing a goose wings flapping”

Happy-looking guy toting a solar panel and wind turbines in the background (one with a tower made of the same material as Wonder Woman’s plane; blade arrangements may vary). The relationship is wrong between the goose (one of many!) and the person.

A grittier fellow has a giant goose bearing down on him (again, wrong relationship), while some mutant beasts flap in the background. The turbines have their own problems on the mutation front. The “flowers” at lower right look suspiciously like goose wings.

This cheerful go-getter is giving a piggy-back ride to an enormous goose, while avoiding trampling pigeon-sized Canada Geese. What’s up with his arm moving through the panel?

I ended up choosing the only one with a goose out front, who indeed looks unhappy about the situation. Sure enough, the guy is wearing a solar panel, so hats’ off for that small miracle! The wind turbine is designed by Schrödinger: both in the foreground and background at the same time. Or maybe Escher.

Let’s Make a Deal

Okay, I’m sure you’ve gotten the point long before now, but let’s go through one last instructive example. In the Let’s Make a Deal post, I ask questions like: would you trade X for all the animals in the world? So, I pictured a balance-beam scale with animals on one side and a smart phone on the other. Simple idea. Simple weighing of choices.

My first prompt: “balance beam with animals on one side and smart phone on other side” Got it? A kid would understand, right?

Cute animals all down the line. The scale helpfully sits on top of the phone., and is of a construction that leaves it with no way to tip/tilt.

Woah! This is fun! Animals: do whatever you want! We’ll give you a phone to play with, and people (who are indeed animals: well played, there) tromping around below. The scale has a heart! Not all strings make it to the platforms.

These animals are a bit grumpier, maybe because someone cut a heart. The smart phone isn’t in the balance.

Similar to the first: the immobilized scale sits atop the phone, with animals all down the line.

Zero for four. So let’s try being more specific: “balance beam with animals on left side and smart phone on right side” Left and right. Got it? We gotta keep ’em separated.

Oh dear. Get yourselves organized, down there! The phone—refusing to pick sides in a polarized world—says 0FC, which sounds pretty cold.

Another immobile scale fails to pick up the smart phone, or all the animals wandering about. I like the scientist bird.

An improvement. But the AI must have been asking “my right or your right?” No clean separation.

Okay: a workable scale (helpfully labeled along the top), smart phone on the right, but mixed together with animals. A few extra phones just in case (screens are always on, notice).

We clearly need better prompting. How about: “balance beam with animals on left side and smart phone on right side no animals on right side” Maybe this will finally separate them.

Ugh. No smart phones on the scale at all. Full symmetry. I like the extra baskets below the scale (I’d pay extra for that), and the purse in mid-air.

Apparatus is unclear: scale or balance? This time at least the smart phone is on the right, but the instruction of no animals on the right side was clearly ignored. Maybe the animals are the problem, unable or unwilling to follow directions.

Brilliant! Why didn’t I think of that? Maybe because I’m not a moron.

A happy crew that again fails to establish the key relationships.

I gave up, and decided to try a spin-off on the game show, after failing to find free-use images. The prompt was: “Let’s Make a Deal” game show contestents. Yes, my misspelling was in the prompt. All the same, each of the four images produced was passable, and similarly wacky. I picked this one:

I gawk at the size disparities among the people (don’t look too closely at the disturbing finger), and also the fantastic numbers. They remind me of threeve. The one on the left has two dollar signs and a bold leading zero. The middle one cleverly recognizes that commas (this one fork-tongued) sometimes appear randomly in numbers without any rules. The one on the right is my favorite, though. I like the leading five-ish number, the efficient combination one-comma, and the four-Delta. The show title got cut off on the right edge, so we can’t rule out the return of DEAP! Somehow I’m able to just accept the garish avian-themed set.

Lessons

Why did I belabor these trials with AI? Perhaps I only expose how inept I am at speaking AI-prompt. I would appreciate pointers that would remedy the wasted time and resources, only to produce mostly garbage (like how to group words to force correct relationships). As a result of the failed trials, I feel appropriately guilty for the associated energy expenditures.

I show all these examples so that you can live a similar experience and see how silly AI often is. Not to sell it too short: I am truly impressed that a visual representation can be created so quickly that would take me weeks to produce—if I even had the skill to do so at all. But it can’t tell stories.

AI is good at placing objects in an image, dressing them up in funky costumes and rendering in various artistic styles. Just don’t try to specify how/where the elements are to be arranged. Images can also be accidentally evocative—but I suspect only because the training set had evocative imagery.

What it consistently misses is relationships between elements—crucial for a story. It works in the realm of objects and detail, not in synthesis of a larger whole. Nouns and adjectives, but not verbs. If you’ve read Iain McGilchrist’s The Master and His Emissary, you’ll easily identify AI as an algorithmic left-hemisphere processor, lacking the qualities of the right hemisphere for understanding metaphor, narrative, relationships, and the whole. We need to be better than that.

Views: 3093

The last paragraph opens so many questions. Can a machine that processes masses of information using 1s and 0s ever have anything but an algorithmic perspective? The architecture defines the abilities here. What is the fundamental information processing architecture of the human brain? I doubt it reduces to 1s and 0s.

Also unsaid in this essay is the accelerating growth in energy demand for AI. Like your previous essay on the efficiency of living bodies compared to machines, I’m beginning to wonder if artificial knowledge is equally inefficient. There must be a theoretical limit to intelligence processing.

I found and read this book: "Atlas of AI Power, Politics, and the Planetary Costs of Artificial Intelligence by KATE CRAWFORD" it contain some references to papers trying to gauge IT energy cost.

Neural nets don't have the laws of physics governing them, so they often ignore them while creating whatever the end result may be. Ever had a weird dream where things morph and change or simply don't make sense in their construction, layout, or response to input?

Ai is going to be prone to the same limitations that us humans experience in our dreams. Our dreams are usually close to physical reality but have that one quirk that makes it obvious that the experience is a dream and not reality.

Those examples are hilarious (and sometimes disturbing, like the 'zombies' paddling out of the ocean lol). Looking at all those chainsaw failures, I couldn't help thinking that you'd have saved yourself a lot of time and effort by actually climbing a tree, wielding a chainsaw and getting someone to take a photo…

Artificial 'intelligence' has no concept of relationships, or of anything else. It can have no understanding because it has no feeling, no sentience… again, words fail, when it comes to describing something humans (and other animals) take so much for granted – i.e., consciousness.

Can a machine ever be aware? Consciousness does appear to be unique to biology. You could run an AI training program for millions of years, giving it all the libraries and computer networks you like – the end product, however complex, would still just be a computer program. A list of symbols, however long, does not constitute awareness.

Evolved processors (e.g., brains but even simpler arrangements) cannot afford to be unaware of its contextual surroundings. Such systems simply fail to navigate and survive the world. This means an intuitive grasp of relationships. Even an amoeba may be said to be aware, and processing reactions to the environment it finds itself in. The awareness is baked into how this collection of atoms is arranged, according to instructions that are the way they are because they work. Consciousness/awareness shows up in biology because that's the domain on which evolution operates. Give it billions of years and amazing degrees of awareness may emerge. No magic spark outside physics: just what happens to work.

AI has never been subjected to such unforgiving selection pressure, and is akin to a mutant that can't do the first thing to ensure its own survival (except as a parasite on human brains, apparently).

I agree, biology is a subset of physics. Exactly how consciousness arises is unclear, but no magic is involved, only physics.

Is it just a case of emergence from complexity? Maybe some as yet undiscovered physics is needed? (Perhaps consciousness exploits the 'gap' between general relativity and quantum theory?)

It's tricky, trying to study/understand something which is the very thing that enables understanding – in other words, the experimenter can't be 100% objective, due to it being impossible to remove oneself from the experience of consciousness.

Regarding its not having been subjected to selection pressure, IMHO no amount of evolution can ever produce consciousness in AI, for the reasons previously mentioned.

The only consciousness a computer might possess would be due to its mineral content, not its (machine) configuration. That is, a rock of similar weight and chemical composition would have a similar (tiny) amount of consciousness.

I think you're on the right track, here: nothing exotic is needed to provide ample room for emergent complexity—to a sensational degree (in that such mechanisms provide sensations). No gap needs minding, either in physics or biology—in that consciousness is a continuous matter of degree from the amoeba's simple awareness (sensation) of its environment to what we and other more complex lifeforms experience. It's truly fantastic, and I love it, but we're certainly not smart enough to elicit a gap or a reason that vanilla physics is incapable of expressing the degree of complexity (and amazingness) we experience. The "tricky" bit is some peoples' disappointment that our sophisticated sensations could have such simple roots. We want it to be more special/magic, but that's a non-objective error we bring to the table, in my opinion.

This is a good illustration of the saying "a picture is worth a thousand words".

When we make a picture ourselves we basically describe what we want the picture to look like very precisely way to our body and it makes the picture exactly how we want it to look. Because it is pretty much impossible to describe exactly what we want the picture to look like to an AI picture machine the result is always going to be not quite what we had in mind depending on our flexibility.

Long time reader, first time (I think) commenter. I’m not going to tell you you shouldn’t have fun making so images. But I will say that this tech has filled me with a sense of dread for the future like I have t had in a long time. I won’t go into too much of a spiel but you have to realize this tech has really created a lot of anguish in the art world. It’s easy to look at these goofy images and think that artists have nothing to fear and you might be completely right. But I still find it appalling- these Silicon Valley ghouls built an incredibly expensive computer in order to replace humans at something *people enjoy doing*. I have this dreadful feeling that in the future, no kid will ever pick up a box of markers because making drawings just won’t be something that humans do any more. This honestly makes me *hopeful* for the collapse you’ve written about in other places, because it’s clear if we get our Star Trek future our technocratic corporate overlords can’t be trusted to build a future people will actually want to live in.

Oops I meant for this to be an independent comment, not a reply to George.