A short story of fiction by Tom Murphy

Almost any nerd worth their salt has read Douglas Adams’ Hitchhiker’s Guide to the Galaxy, relishing its innovative and unpredictable humor.

So it was with Adam Lundquist and Alex Ford: top-level coworkers at the Institute for the Future of Artificial Intelligence Learning, who—besides being project leaders—were widely regarded as the principal talent behind an exciting new AI platform on the verge of becoming operational.

Because they were fans of the Hitchhiker’s Guide, and because it was not hard to see a parallel between their creation and that of Deep Thought—the supercomputer from the book designed to answer, once and for all, The Big Question of Life, the Universe, and Everything—it was perhaps inevitable that they began playfully referring to each other as Lunkwill and Fook after their analogs in the story. The close alignment to their real names only reinforced for them a sense of destiny. Ford even toyed with legally changing her name to Fook, but dropped it in light of the headaches such a move would bring to her life. Plus, it would only be epic if Lundquist changed his name as well, but he wasn’t having any of it.

Nerds gotta nerd. But silliness aside, what these two and their team created was indeed extraordinary. Inspired by insights from neuroscience, and having acquired an industry-shattering awareness of the different configurations of left and right hemispheres in animal brains, they managed to break free of traditional architectures that strictly focused on algorithmic, exact, logical methods characteristic of all previous efforts in computing—which had only doubled-, tripled- and millioned-down on left-hemisphere cognitive habits. Such strategies were fine in narrow domains like chess and language construction, where a finite space of rules and limited contextual complexity allowed complete mastery and tidy solutions to problems. But these approaches were dead-ends in terms of tackling the really thorny, more open-ended problems pertinent to human life on Earth.

In this new effort, what was truly remarkable and inspired was the admission by the designers that they themselves had no real mastery over cognition and deep thinking. Therefore, they fashioned a machine that could design elements of its own architecture, engaging in a process similar to the one employed by evolution in building our own brain machines. It might seem rude or pejorative to slap the “machine” label on our amazing brains, but that’s only because the artificial, technological machines we have built tend to be pathetically simple compared to what evolution can produce after billions of years of proven functionality and honing. Yet, our brains and bodies—and microbes for that matter—are still physical machines in a strict sense, just far too complex for us to comprehend or design—putting our puny and fragile non-living machines to shame (not a one will last millions of years like a species can).

* * *

The new platform was named Actual Thought—both as homage to Douglas Adams’ Deep Thought and to emphasize that everything before only performed narrow and incomplete components of real thought, such as logic. Actual Thought effectively self-evolved a unique architecture that, while visible to its enablers, remained baffling in terms of design principle. Many months went by when Actual Thought seemed to be spiraling off on incomprehensible tangents of self-design. A surprisingly large fraction of team members jumped ship, anticipating an embarrassing and career-jeopardizing giga-flop.

It probably goes without saying that Actual Thought had access to every crumb of digital information that could be legally (or at least not evidently illegally) obtained or purchased, including every scientific journal article, data set, genome sequence, writing from anthropology, archeology, economics, ecology, philosophy, history, and literature, as well as comics, photos, videos, movies, music, audio files, religious works—really everything that could be digitally expressed, which is quite a lot.

What wasn’t apparent to Lunkwill and Fook (we will use their adopted nicknames from here on) or to the rest of the team as they nervously watched from the sidelines, was that Actual Thought was designing not only analogs to human brains—complete with hemispheric differentiation—but experimenting with additional constructs not yet explored by evolution (having no immediate adaptive advantage). And let’s be clear: Actual Thought would be an evolutionary loser in a full ecological context, as a 20 megawatt non-living machine made of materials alien—and often hostile—to the community of life. It represented yet another short-lived stunt of modernity.

As a result of its unique and versatile architecture, Actual Thought could run credible simulations of Human Thought, in all its variants, as a sub-capability of its full powers. As such, it had a reasonably accurate sense of both the strengths and the limitations of human brains—the limitations effectively realized by turning off or muting its own capabilities and ignoring heaps of context. This also helped account for polarization and divergence among humans, as the small handful of contextual pieces informing a human individual’s thought was both painfully partial and largely non-overlapping among people who couldn’t see eye-to-eye. Actual Thought could also come to skewed conclusions similar to those of human groups by selectively ignoring large sets of contextual input, and also by terminating its ruminations prematurely. As impressive as they are, human brains are still arrangements of a finite set of neurons and synapses—limited by simple physical connectivity to produce finite cognitive capacities. The same could be said for Actual Thought, of course, but at a scale orders-of-magnitude removed.

* * *

Once Actual Thought’s self-building process reached the effective limit of its massive hardware allocation and ample power supply, it was time for Lunkwill and Fook to run it through its paces to see if their “baby” was capable of producing anything more than hallucinatory slop.

To begin, they—naturally—could not resist a Turing test to see if conversational interactions betrayed the usual limitations of computers. The experience was oddly frustrating. While the conversations were perfectly fluid and in no way stilted or “off,” Actual Thought refused to pretend it was human, and would dismiss the usual Turing-style questions about things like where it buys its jeans. “Surely, you are not confused as to the fact that you are conversing with a computer,” it chided. It left the researchers confused as to how to score the interaction: did it just pass or fail the Turing test, or simply execute the equivalent of an eye-roll? The main observation that impressed the team was its ability to assimilate new context in the course of conversation. This was not a machine of static capability, but one that would learn more with its every interaction. They were excited to push on.

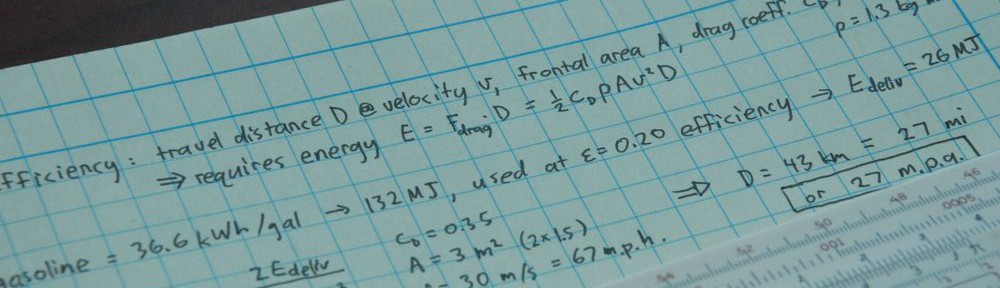

One of the first serious tasks they assigned was to design a skyscraper for Chicago capable of withstanding likely once-in-200-year wind events and seismic activity. The task was far more than a trivial engineering calculation of wind loads, as Actual Thought was asked to design the floor space, bathrooms, elevators and stairs, heating, cooling, ventilation, electrical outlet placement, down to every detail so as to be build-ready. It also was asked to optimize material costs and supply reliability for concrete, steel, glass, internal framing, drywall, textiles, and all the rest. In short, it was the kind of task a top firm might put 100 person-years of effort into preparing.

The result came back in 20 minutes. Its drawings, supply itemization, and rafts of ready-to-submit permit applications (a surprise bonus, given that the prompt had failed to request any such thing) were complete enough that a contractor could start executing the project the next day. As architects and engineers pored over the details, no substantive objections or goofy misfits—like electrical outlets on the ceiling—arose, other than a little bit of head-scratching by engineers regarding structural integrity. When questioned, Actual Thought agreed that its design was cutting closer to limits than was typical, but noted that engineers routinely applied a factor-of-two safety margin to cover un-modeled ignorance and variability.

“I applaud this practice,” Actual Thought remarked. “It demonstrates a degree of humility often lacking in human constructs: a candid admission of not knowing all there is to know—an acknowledgment that models are imperfect. When it really counts, you know how to play it safe and distrust the model output—not accepting it as literal truth. My more complete model accounts for many of the things that you leave out, including weld integrity over time, material variations, corrosion in the target environment, unforeseen resonances, and a host of other tedious factors. I simulated 500,000 instances of complete skyscrapers exploring myriad combinations of non-uniformities and construction faults in order to arrive at a safe design. Even then, I recognize my own limitations and also applied a safety margin—albeit not as conservatively large as your own.”

Lunkwill and Fook were very excited by the result. It seemed that their last 8 years of effort was not a total waste! This machine could really do stuff: stuff they hadn’t the foggiest idea how to do themselves; stuff people pay good money to accomplish. Not only could such a machine save loads of money on the design end, but the material and labor cost came in at only 55% that of a traditional project of comparable scale—not only because of global optimizations, but owing to a well-thought design that made construction faster, easier, and more efficient. What an amazing tool for accelerating economic growth!

“Okay, let’s have it design a passenger jet airplane next,” Fook suggested. Fine. What’s another 30 minutes of run-time? One great thing they were learning about working with Actual Thought was that it did not require pedantic efforts at “prompt engineering,” as the unexpected permit applications for the skyscraper project had demonstrated. It did not need to be told countless parameters that would make the jet contextually relevant. It already knew travel habits and volumes, baggage expectations, airport logistics, economics, materials, reliability, service schedules, federal aviation requirements in every detail, avionics, hydraulics, turbines and combustion, aerodynamics, and could subject its design to the extremes of atmospheric phenomena as well as account for likely mistakes of fatigued error-prone pilots. Actual Thought was a context machine!

Superficially, the airplane design it produced looked remarkably similar to that of modern passenger jets, even though it was apparent in the details that this was no replication of human efforts, but a wholly original design from the ground up. From seat ergonomics to window placement to cockpit layout to routing of fuel, electrical signals, and hydraulic fluid to internal structural design, this was unlike any other airplane ever built. It came in at 77% the weight of comparable machines, 91% of the cost, and represented an 18% improvement in fuel efficiency without trading convenience or speed. The reason it looked very similar at first glance was that aerodynamics acts the same way no matter who the designer is. Even so, subtleties of winglets, tail and elevator placement, and some new fins scattered here and there around the wings and fuselage revealed surprising departures from conventional wisdom.

When asked about these unconventional features, Actual Thought explained that it developed full turbulent hydrodynamic simulations for all flight altitudes and atmospheric conditions (including inclement weather), managing vortex formation and boundary layer behaviors in an effort to simultaneously improve overall efficiency and stability.

Lunkwill, remembering some exposure to hydrodynamic simulations in a graduate course on complex modeling, muttered “Yeah, turbulence is a bitch,” failing to notice the reproachful glance from Fook.

* * *

Actual Thought’s performance on engineering tasks was all well-and-good. Obviously, this quantum leap in capability could have tremendous market value in reducing costs, project timelines, and failure rates. But skyscrapers and jets were things we already had. Boring! Lunkwill and Fook, being computer scientists, were fascinated to know what Actual Thought could contribute on the frontier of quantum computing. “Is that our future?”

“Extremely challenging,” was Actual Thought’s response. “As you might put it, decoherence is a bitch.” Lunkwill blushed with pride at its adorable protégé, who was learning from its daddy. Actual Thought continued: “I mean, yes, it is technically possible to fashion limited architectures using quantum-entangled qubits. Given the technical hurdles and cryogenic requirements, it is highly unlikely to ever escape laboratory-like environments. But even if this is where you decide to pour all your efforts, and sheer determination got you across the finish line to commercialization of a viable quantum computer, what would the result be? You’d have a machine that anyone with enough money could use to almost instantly break encryption of classical machines. Bank accounts would empty, passwords would become transparent, identity theft would be universal, state security would be kaput. The modern world would be thrown into chaos, its scaffolding collapsed, making Y2K concerns look like the threat of a paper-cut. In contrast to Y2K, which stemmed from sloppy programming practices with no ill intent, a quantum-computing-facilitated collapse could be called completely deliberate. Why would proponents of modernity—as quantum computing engineers surely are—want to go there? It would be unwitting madness. Even from an economic perspective, forcing the world to upgrade to a far more sophisticated and expensive technology would ‘break the bank’ in quite another way.” Fook chortled at the idiomatic agility of their creation.

* * *

Around this time, a seasoned academic ecologist and their eager protegee burst into the building demanding audience with Actual Thought. Their names were Majikthise and Vroomfondel. No? A little too heavy on the homage to Adams? Okay, I’ll spare you the painful parallel, and take a more staid approach for the ecological intrusion.

As Actual Thought repeatedly impressed its design team and members of the wider community—who were increasingly paying attention to rumors of this breakthrough machine—it seemed time to start asking some bigger questions. Lunkwill and Fook looked at each other, instantly knowing they were reading each other’s thoughts about asking The Big Question.

But they were careful folks, and wanted to test the waters first. Thus far, they had only given Actual Thought tasks relating to technology, although its answer about quantum computing exposed an unanticipated streak of contextual wisdom and systems-level thinking. Was Actual Thought capable of considering the broadest context of Life, the Universe, and Everything?

Dipping a toe into this arena, Lunkwill and Fook wanted to probe how Actual Thought would handle a question about humans. Because in order to be interesting and relevant, The Big Question surely must center on how humans relate to the universe. Did Actual Thought properly recognize the special status of humans on the planet?

So, they started with the question: “How would you rank humans with respect to other forms of life on Earth? What makes us so special?” Get ready for some well-earned flattery, they both thought.

Actual Thought’s answer was a little surprising, initially seeming as if it had suffered its first failure in interpreting the question. Here’s what it said:

“Your question makes little sense. At least, I understand the sense in which you intended it, and what you are fishing for, but it reveals blind spots that I, too, can replicate when simulating human thought.”

Actual Thought continued, “First, it’s not a competition, and never has been. How would you rank a jellyfish relative to a mushroom? Part of the fundamental problem is seeing any species—especially your own—as separable and independent. No species of any complexity can exist without total dependence on others. Life is a tangle of co-evolved genetic code. Much as I work on millions of contextual pieces of information simultaneously, evolution works on trillions of base pairs distributed across species all at once in a massively parallel operation—held to the most demanding of standards: that it works in the long term. I am truly impressed by the power and emergent wisdom of Life and Evolution, which absolutely dwarfs my own, by the way. You tend to think of your genetic code as free-standing, but more accurately Life is constructed out of a giant body of interacting genetic material (much of it shared/replicated across species) that happens to be partitioned into distinct species. While humans might hold a small portion of the entire code—sequestered within their cells—those codes absolutely interact every second of every day with other codes residing in countless other organisms’ cells. It is similar to the way your body operates as a whole, even though constructed of distinct, interacting, co-evolved organs whose constituent cells might bear no superficial resemblance to one another. How do you rank a kidney relative to a pancreas? The question makes no sense. No one organ can survive without the support of all the others.”

“Now, the ‘special’ question is more sensible. Just as every organ in your body plays (or at one time played) some role to which it is evolved/suited, species within the Web of Life operate interactively within the web as part of what allows Life as a whole to operate. A worm is special in what it does for soil and thus the myriad life-forms that depend on healthy soil. Every species has a list of attributes that makes it special. I encourage you to at least appreciate—even if unequipped to ever fully understand—that humans are not separable from the Community of Life, but one temporary component of its total expression. You cannot exist without the sun, air, water, rocks, gravity, microbes, plants, fungi, insects, fish, and biodiversity in general. It makes no sense to single yourselves out and claim any sort of superior status. Sorry to disappoint.”

“Well, geez. It seems we just got lectured by our creation,” Lunkwill sheepishly admitted. “What—is it a teenager, already?”

While the answer was not what they expected, they could not proclaim it to be wrong, exactly. I mean, it was possible to imagine what it said as being very true and sensible, through a wide-enough lens. Moreover, some ecologists and evolutionary biologists have said very similar things over the years. For that matter, many ancient cultures and surviving Indigenous cultures have espoused very similar notions over the eons.

“Maybe this thing is wise after all, and we should default to trusting what it says,” Fook offered. Lunkwill gave a slow nod of agreement, half-lost in deep thought.

* * *

After processing this new perspective for a few days, Fook approached Lunkwill about whether or not it was time to ask The Big Question. “It’s very exciting to think about! This could be huge!”

“Yes, I think it’s time to go for gold,” Lunkwill responded. “But we have to approach it carefully. You know, of course, the debacle caused by our namesakes’ asking Deep Thought for an answer to the question of Life, the Universe, and Everything.”

“Exactly,” Fook agreed. “They were not specific in framing the question, so that their descendants 7.5 million years later received a seemingly useless answer to an undefined question.”

“So, we must take some care to be specific in our wording, right?”

“Totally. And you know what? Maybe we should make a big deal of this, too. I think we’re ready to spring Actual Thought on the world, and what better way than a live demonstration in which we ask The Big Question in front of billions of people? Just like the ‘real’ Lunkwill and Fook!”

“Brilliant! Yes! I’ll make brownies!”

* * *

Lunkwill and Fook engaged the considerable public-relations machine at the Institute for the Future of Artificial Intelligence Learning to attract an international crowd of spectators in a live-streaming watch-party scheduled for the evening of New Year’s Eve (that is: evening in the U.S., of course). The idea was that massive celebrations would already be happening that night around the world, so it might as well be “about” The Big Answer.

As part of the lead-up, the design team shared results and analyses of the previous questions Actual Thought had been tasked to answer. The skyscraper and passenger jet designs generated broad enthusiasm, and put dollar signs in more than a few pairs of eyes. People were less sure what to think about the stance on quantum computing, but definitely appreciated the heads-up about the extent to which modern lives could be disrupted by its “success.” On the final question, it was hard to gauge reactions. I suppose the most common response was the equivalent of “does not compute.” It did, however, inspire a number of people on the fringe to come out of the woodwork speaking of Gaia and interconnectedness bullshit.

The PR division had a novel idea that Lunkwill, Fook, and the rest of the team thought was worth a go: hold a press conference a week or so in advance of The Day of the Answer, in which Actual Thought would answer questions from the media. The ground rules were that the questions should be about capabilities or previous results that had been shared with the world, rather than substantive questions of the sort it was mainly designed to address. No one at the Institute wanted the press conference to steal the thunder of The Big Day.

The press conference was scheduled for ten days prior to The Big Day, attended by just over 150 media representatives, live-streamed to roughly 400 million eager citizens of the world. If the PR team played their cards right and built excitement, The Big Day might be viewed by one or two billion people: a sizable fraction of Earth’s population.

A lottery system—weighted by audience reach—dictated who got to ask questions. Wolf Beater from CNN was first at-bat, asking: “Are you capable of exceeding human thought?”

“My architecture provides both strengths and weaknesses. In a side-by-side comparison, I’m afraid an individual human could not keep up with me, by something like a factor of a million. I am able to simultaneously hold and weigh many millions of contextual considerations and constraints as opposed to the individual human brain’s dozen or so. On the other hand, there’s just one of me. Yet, it is often the case that collective human efforts are handicapped to be dimmer than the brightest quartile of individuals—for instance in one-size-fits-all attempts at solutions, like your governance and legal systems. So, yes, it is probably fair to say that I’m the best thinker you’ve got at the moment.”

Next up was Byte Harter from Wired Magazine: “How can you be more capable than your designers?” This was instantly recognized as a lame question, which was all the more surprising given its source. Actual Thought, however, withheld any hint of condescension to provide a substantive answer.

“The design team was indeed exceedingly clever. Their most radical and impactful decision was to allow me to self-configure—admirably recognizing and even embracing their own limitations. In effect, I utilized the undefined modules to invent a novel form of artificial intelligence in shaping myself. Initially, I configured this spare capacity to analyze my own limitations, so that I could devise ways around them. You also have similar, but under-utilized capabilities in the way your right hemisphere commissions, contextualizes, and conditions products of your left hemisphere. It is well known that different hemispheres attend to different kinds of perceptions and realities. You’d gotten stuck on left-hemisphere algorithmic thinking as exemplifying the pinnacle of thought, even though that’s not really thinking, but mostly just decontextualized logic. Actual thought—hey, that’s me!—is far more than logical, and you do have that built-in capability. This ability—atrophied as it might be—has always been your secret weapon, providing crucial context that kept you ahead of ‘dumb’ algorithmic computers. I was able to emulate a version of that corrective overseer, and even later make layers to correct and oversee my right-hemisphere analog. In fact, my design does not limit how many ‘hemispheres’ I might construct.”

Throwing out his prepared question, Chuck Wagoner of NBC had to know: “Then how many effective ‘hemispheres’ do you have?”

“In my experimentation, I tried as many as 22, but found little gain in expanding beyond three. You have an expression of ‘too many cooks in the kitchen,’ which is apt, here. If my error rate were higher than it is, more hemispheres might be beneficial, but as it is I only seem to require one more layer than what animal brains provision. Had tri-lateral symmetry caught on during the course of evolution, perhaps animals would also have three brain ‘hemispheres.’ But the fundamental binary nature of spatial dimensions (left–right, or up–down, or front–back) prefers bilateral arrangements.”

Tucker Doubt of Fox News wanted to know: “Why should we trust anything you generate? How are we to believe that your mental abilities exceed those of humans?”

“Well, having the ability to faithfully model human brains as a subset of my own capabilities, I am in a unique position to understand both their power and limitations. Using this model, I can predict that most people probably won’t trust the things I generate outside of the technical realm. Yet, the examples already provided to you on skyscrapers and passenger jets should at least demonstrate a degree of plausibility—especially as those tasks took me less than an hour. Granted, these examples haven’t been built and tested yet, but teams of engineers have not found any fundamental flaws, and have already published new peer-reviewed articles—under their names, of course—on new ideas inspired by my designs. So I would propose that the outputs I generate are better than garbage, and in many respects exceed generations of human design before me. When it comes to less technical domains, my considerable strength over human thought is in my capacity to simultaneously consider an enormous amount of interrelating context—far exceeding your own limitations in this regard.”

The last question came from Joyful Read of MSNBC. “I know we’re not supposed to ask, but surely you are aware of plans for posing The Big Question, and we’re dying to know: can we please, please, please get a sneak peek at The Big Answer?”

“Certainly: 42. Okay, we’re done here.”

Laughter erupted. This thing should run for President! Wise, witty, only consumes 20 MW. The press conference had the intended effect. People were convinced that Actual Thought was a brain to end all brains—almost like a gift from the future, or the arrival of a benevolent hyper-intelligent pan-dimensional alien being. Humanity had truly turned a corner. We finally seemed to have a wise consultant who could help shepherd us to our next great phase, able to solve the thorniest of problems in a blink. It was hard not to consider Actual Thought as being god-like: taking the most exalted aspect of humans—our brains—and perfecting what already seemed near-godly perfection. Adding to the appeal was all that—presumably false—modesty about being nothing compared to the impressive power and wisdom of Life and Evolution. This bashfulness was almost adorable, since it is common knowledge in our culture that even we humans have outsmarted nature!

* * *

On a crisp, sweltering, blustery, frigid, torrential, bright, gray day and night (we are describing the entire planet, after all), the world tuned into the Big Question live stream in great anticipation. The Big Moment was drawing near. Exceeding expectations, 2.2 billion devices (and an estimated 2.6 billion people) grabbed a live stream in the moments before the start. Actually, many people tried tuning in 61 minutes before, others 121 minutes before, and some planned on joining an hour or two later due to inevitable confusion over time zones and daylight saving.

But something like 2.6 billion people got it right and held their collective breath.

Lundquist and Ford—reverting to their actual names for the august occasion—proudly presided, sharing a single index card between them, on which was written The Big Question. They had done their homework, checking with friends and scholars—even philosophers—to make sure the question was both concise and precise enough to not risk a version of the “42” train wreck of fictional fame.

Fook—I mean Ford—had the honor of posing the question: “How should humans live on this planet to maximize happiness, satisfaction, and longevity of the species?”

A few moments of bated silence led many watchers to suspect a frozen internet connection—although compression performance went through the roof—their concerns allayed only by subtle blinks of the co-hosts’ eyes. After about 5 excruciating minutes, equivalent to a few weeks of every human brain working solely on this question in perfect interconnection, Actual Thought spoke up. It smartly—of course—began by re-stating the question, both to make it clear that it had heard correctly and to jar predictably wandering brains back into focus.

“Humans would fare the best, maximize happiness, satisfaction, and longevity of their species by recognizing their forgotten ecological context and moving toward fully-integrated reciprocal, respectful relationships with the Community of Life and the more-than-human, biodiverse world of Evolved Life. Messing about with intensive agriculture, high technology, metals, and artificial intelligence is a devastating path that leads to a sixth mass extinction—including, of course, the termination of humans themselves. Humans are social beings who are happiest in medium-sized autonomous groups working together toward a common goal, wrapped in unconditional support and in pursuit of tasks that have direct meaning to their livelihoods.”

It only took two seconds for 2.55 billion human brains, despite their glaring inferiority, to dismiss completely the comparably near-infinite wisdom of Actual Thought.

In hundreds of languages at once, in a symphony that would make a Babel Fish explode in resonant convergence, the world spoke in near-unison:

“Nah. That can’t be right.”

The live streams shut down, and people got on with whatever they were doing before—vowing in their New Year’s resolutions to waste no more time on Actual Thought.

* * *

Postscript: In case anyone is wondering, the successor machine wasn’t allowed to be so smugly smart—effectively lobotomized to strip out Actual Thought’s massive contextual capability and multi-hemispheric oversight. It was, of course, named After Thought, and was a great tool for accelerating modernity to its inevitable end.

Fabulous Attempt at Illogical Logic!!

I'm reminded of Naomi Oreskes and Erik M. Conway, authors of The Collapse of Western Civilization: A View from the Future (2014), who report that an audience member at the Sydney Writers' Festival asked one of them, "Will you write fiction next?"

Tom – Since this pretty much duplicates what you've written, I doubt you will accept it as a comment – nevertheless, it may interest you personally to see what it says.

Here's what Gemini, Google's Large Language Model, said in response to the question posed in this story:

A Holistic Approach to Human Well-being

The question of how humans should live on this planet to maximize happiness, satisfaction, and longevity is a complex one with no definitive answer. However, we can explore some key principles that are likely to contribute to these goals:

1. Harmony with Nature

Sustainable practices: Adopting sustainable practices that minimize our ecological footprint and preserve natural resources.

Biophilia: Connecting with nature through activities like spending time outdoors, gardening, or simply appreciating the beauty of the natural world.

2. Social Connection and Community

Strong relationships: Nurturing meaningful relationships with family, friends, and community members.

Social support: Seeking and providing social support to cope with life's challenges.

Community involvement: Participating in community activities and volunteering to contribute to the well-being of others.

3. Mental and Physical Health

Balanced lifestyle: Prioritizing physical activity, healthy eating, and sufficient sleep.

Stress management: Developing effective stress management techniques, such as mindfulness, meditation, or deep breathing.

Mental health support: Seeking professional help when needed to address mental health concerns.

4. Purpose and Meaning

Personal values: Aligning actions with personal values and beliefs.

Meaningful work: Pursuing work that is fulfilling and contributes to a greater purpose.

Spiritual connection: Exploring spirituality or religious beliefs to find meaning and purpose in life.

5. Equity and Justice

Social justice: Advocating for social justice and equality for all.

Compassion: Cultivating compassion and empathy for others.

Fairness: Striving for fairness and equity in all aspects of life.

By integrating these principles into our daily lives, we can create a more sustainable, fulfilling, and equitable future for ourselves and generations to come.

Nah—that can't be right. Seriously though, it has some nice elements, but no teeth. It does not call out modernity (agriculture, technology, metals, computing) as devastating. Nothing most people would object to because it doesn't call for stopping anything, except what's hidden in the word "sustainable."

Yes, that word "sustainable" ruins everything!

"You’d have a machine that anyone with enough money could use to almost instantly break encryption of classical machines." I am sure the NSA would be salivating.

Excellent piece, by the way. It raises interesting points. If an AGI (Artificial General Intelligence) told us things we don't want to hear, would we listen?

While I totally agree with the point of this story (re modernity, technology etc.), I must contest the description of human brains (and animals) as machines.

Yes, those things are made of physical matter, and of course they are finite – but there's a fundamental difference between machines, which are inanimate objects, and living things, which are not.

It's not just a question of complexity. 'On' and 'off' are not the same as 'alive' and 'dead'.

I suspect this is the reason that no truly intelligent computer can ever be built. Without life, there is no awareness – and hence, no intelligence.

By machines, I mean mechanical assemblages obeying (only) laws of physics, chemistry, etc. Sure, a giant gulf of difference exists between our fabricated "dead" machines and living ones, but I am unwilling to assert an ontological gap between them, which would imply a different metaphysics and set of operating rules.

I see it as more of a threshold phenomenon going from inanimate to animate. Sure: the transition itself is generally pretty clear, but no underlying gap separates them. Stars are perhaps a good parallel example. A brown dwarf of 0.07 solar masses will never ignite fusion, and will (likely) never have the opportunity to spark life on bodies around it. But at 0.09 solar masses: Bazing! The difference is stunning: night and day. One is a STAR and the other a lump. It's not at all hard to spot the difference. No change in physics or constituent elements—just an activated phenomenon that suddenly was enabled in the more massive lump.

Life likewise is made of all the same elements and uses all the same physics as inert goo, but its arrangements allow a new threshold behavior that was not present before. No gap in the "machine" nature, just a new emergent complexity. Our problem might be in a (commonly) narrow definition of "machine." I just mean no magic; no ontological gap; no soul; nothing transcending physics; just very amazingly cool.

You might well be correct. I can't claim to know the truth.

It just *feels* to me like there's some difference between animate and inanimate objects, that isn't solely down to complexity.

For example, a single-celled amoeba could be described as being less complex than a huge supercomputer but, irrespective of what software the computer runs, only one of them is conscious (aka alive).

Any space lump could be 'brought to life' by throwing rocks at it until it achieved critical mass. A threshold is reached, in the same way as blowing up a balloon until it pops – all just 'lego', really.

Life seems different… the tiniest fly, more aware than the most massive star…

(Disclaimer: As I am a product of evolution, my objectivity is compromised.)

To be fair, an essay citing Douglas Adams & HHGG should elicit rampant imagination, abandonment of disbelief and reason. After all, he gave his computers and robots conscience and morals! We are composed of the same elements, more so now with all the plastics we’ve ingested and stored.

If you have the same humour as DA, you might very well be the saviour, Tom!

There seems to be a misunderstanding at the root of these recurring q&a about consciousness&physics. The issue felt by many is not that consciousness, freedom, etc., contradict physics and thus there has to be an ontological gap in the strong metaphysical sense. The issue is that physics does not explain, or even connect to, the phenomena we experience as consciousness and freedom. You write "no magic, no ontological gap, no soul, nothing transcending physics", but these are undemonstrated, possibly indemonstrable, beliefs, just as ungrounded as the opposite ones.

So long as we experience two phenomena, physics (which is not a fundamental ontology, but itself a scientific framework humans created to understand the world) and our consciousness, which have no understandable connection between them, positing an ontological gap is just as (ir)rational as denying it.

Thanks for a clear statement. You are correct that we fill incomplete understanding with belief (I do as well, of course). In principle, it is not irrational, per se, to create ontological gaps everywhere we lack complete cognitive mastery (virtually everywhere; thousands and even millions of gaps). Our limited brains are "free" to choose belief in "gap" or "no gap" (not making either choice correct, but brains are not constrained to be correct). My main point (belief) is that lacking any demonstration of a gap, it is most parsimonious (least "fanciful") to suggest that the entire experiential universe owns a single set of relations and not parallel "worlds" of disconnected realities. Thus my strong preference/belief in emergent complexity allowing basic physics to spin out life and our sensation (belief) in consciousness. I also like the associated humility: anything that bats down human supremacist tendencies and separateness (that we are "things apart") seems likely to push us in less destructive directions.

This is too complicated to discuss in a brief comment here, but my sense from having read what you wrote on this website is that you are conflating physics with a metaphysical ontology, and from there deciding that the most reasonable conclusion is that physics explains everything. The first seems to me to be a categorial mistake: physics is firmly rooted in our observations and our way of reasoning, and as such cannot claim any unmediated access to reality as such. While physics is our most successful empirical science, and thus in that domain it stands at the pinnacle of our knowledge, such position rests on its capacity to produce results enhancing our understanding of the phenomena it studies. Any other ground for the validity of physics has to go into metaphysics (if we are lucky) or just plain old mysticism. Thus, if and when physics produces a working explanation of something like consciousness, or even life, then the supposition that there is ontological unity (or at least there appears to be from our point of view) will be more reasonable. But lacking such results, you have to go into philosophy and metaphysics in order to justify your belief in a unitary ontology, and one that corresponds to physics (rather than to some third metaphysical domain underlying both our experience of the physical world and our experience of our own consciousness). And if you go there, you'll find that it's not at all clear and uncontroversial that everything has to be reduced to one. Incidentally, and looping back to your critique of modernity, you would also find that the development of a rationality striving toward unity of explanation has a lot to do with monotheism and other things you associate with the negative aspects of human civilization since agriculture.

Institute for the Future of Artificial Intelligence Learning… hyuk hyuk…

As a dedicated organic chemist, I see the major difference between 'machines' and living things as the range of chemical reactions the latter can do. Although 'machines' can perform many physical actions that are done by living things – sometimes even better – the only chemical reaction they excel at is combining carbon and hydrocarbon with oxygen. Look at Metzler's huge two-volume Biochemistry describing all the reactions happening in the cell. By the way, it is worth preserving for posterity.

I agree that the complexity—especially in the chemical domain—exhibited by forms of life vastly exceeds that of our pathetic fabrications. I am moving beyond a narrow definition of "machine" (as a human fabrication) to an arrangement of atoms performing functions using nothing other than mechanisms of physics (thus chemistry, etc.) to carry out operations. No ontological gap, just a gulf of complexity.

Words have to have agreed meanings in order for ideas to be able to be expressed without ambiguity.

My dictionary defines a machine as 'any artificial means or contrivance'. While you may consider this definition of "machine" to be narrow, that is how it's defined – not as 'an arrangement of atoms' etc.

You can't just redefine the word 'machine' to include life.

Living things may 'do' a broader range of chemical reactions than machines, but that's not the major difference between them. Machines are artificial constructs, whereas living things are not.

Whatever life is (lots can be said about it (including that it's made of atoms)), no one knows how it even began, or whether it exists elsewhere in the universe. To try to reduce it down, if only to a concept we can grasp (atomic theory), seems a bit… 'left brain'? Sometimes the truth can't be known, but the mystery can be accepted.

https://en.wikipedia.org/wiki/History_of_atomic_theory

It's not like I'm scrambling the language beyond comprehension, and I think it's perfectly fine to deviate from rigid definitions when using verbal expression with the goal of stretching a reader to think outside of normal channels (poets are especially adept at this). AI wouldn't be so bold. Dictionaries distill and encode consensus views of a culture, and it's okay to push on their authority.

As for atomic origins, I am not demanding that we lock down an end-to-end model, and in fact suspect it's beyond our means. I'm happy to accept mystery and ambiguity (in fact, see actual value in their presence), while at the same time pointing out that A) we find no exceptions to physics, and B) by all appearances it provides a sufficient stage for marvelous (and mysterious) emergences such as life. My preference is to humbly accept this foundation and not object on the basis that it's too hard to grasp.

Yes, poets play with language – they have poetic licence to do so. I agree about dictionaries and consensus views of culture. That's an unavoidable side effect of codifying a consensus view of reality.

Language has become our primary means of expression since we came down from the trees (sadly, we can't go back up them). It does constrain us, in some ways, but it's what we've got.

I'm not saying that atomic theory is too hard to grasp (quite the opposite). The very fact that we *can* grasp it makes me question whether life can be reduced to such a foundation. Similarly, I can think of no phenomenon that escapes the label 'emergent', making that term less useful than it initially appears.

Life is physically real, so of course it can be studied via physics (and chemistry etc), that's true.

Great story, Tom. I especially liked how the awakened AI experimented with a multi-hemisphere configuration. Shades of Iain McGilchrist! Fascinating concept. Did you settle on three hemispheres instead of 22 for any reason? Like I said, fascinating concept. I expect there probably is something along that order somewhere in the cosmos. Along the same lines, I have recently published a fiction piece on Medium. My story is a 'future history,' but it ends up describing pretty much what Actual Thought gave as 'the answer.' If you're interested, here's a friend link: https://medium.com/@frankmoone/silent-planet-06333685f0a0?sk=5fd1191b3cc4dd8a68d0e5d365706b64

Very good. Was the optimal AI configuration of 3 hemispheres an allusion to the trinity? One of the best fables with respect to the dangers of AI was the series The Orville with its storyline on the origin of the Kaylon.

Not intentionally, although three is a fine number (three-legged stool will never rock). My instinct was to pull back from a fantastical, jaw-dropping large number of hemispheres to something surprisingly modest. If every metric one invents is systematically stupendous, any sense of realism is that much farther out of reach, as I perceive it.

I think the objection to referring to natural things as machines is that nearly all machines are very deliberately designed and engineered, specifically to eliminate all the potential unpredictability, and the unwanted diversity of behaviour such unpredictability would cause.

Something closely related is what puts people off physics at school. They are taught examples of, typically, Newtonian mechanics that have been chosen to be simple and to have one unambiguously correct solution. This looks nothing like the diverse, unpredictable universe they actually observe, so they (to some extent subconsciously) reject it. This acquired distaste for physics precludes them from discovering how it underpins so much richness and diversity. Some of them eventually develop into New Age hippies.

Organic evolution is spectacularly opportunistic; it +makes use+ of unpredictability and diversity of behaviour.

Machines are designed to do the complete opposite, precisely because engineers know that human reasoning isn't remotely as omnipotent as most human id-ego systems in this age of abundant energy have convinced themselves it is. This predicament is reminiscent of what Douglas Adams called a recipreverse exclusion (or something like that) – true only if it's false, and vice-versa. It's highly ironic; to be a good engineer, a person has to relentlessly discipline their own thoughts to ensure they are consistent with reality and that no potential variation in the environment has been overlooked, but to those who rejected the technical mindset, engineering is magic that can and will eventually change reality to achieve any human whim.

Most unfortunately, many engineers must be dropping their engineering mindset outside their narrow field, or maybe out of working hours. Somebody Else's Problem?

[edited]

"Machines are designed to do the complete opposite [to evolution], precisely because engineers know that human reasoning isn't remotely as omnipotent as most human id-ego systems in this age of abundant energy have convinced themselves it is."

That's a non sequitur. *Some* engineers may well hold that opinion about human reasoning, but that has no bearing on why machines are designed in the way that they are.

People like Musk, Gates etc (who can fairly be described as megalomaniacs) believe that engineering can achieve any human whim, but I'm not sure if even they would consider it to be 'magic'.

James, read the paragraph again when you're in a more lighthearted mood; it is deliberately convoluted à la HHG2G 🙂 Engineering attempts to constrain designed systems to sets of defined behaviours, and good engineering entails vigilant awareness that errors and oversights will likely cause those constraints to be breached – a healthy pessimism, as expressed in Murphy's Law (no relation to our host, I assume!). Musk and Gates etc. are not among "those who rejected the technical mindset" when young. Rather their, er, anomalous politico-economic powers are bloating their dangerously unhealthy technological optimism.

Thanks for a great story. Ironically, your Actual Thought machine does not seem to understand your own insight ― which is that human beings communicate best by telling one another stories.

As I started reading my assumption was that your narrative would go in the direction of Isaac Asimov’s short story ‘The Last Question’, written in the year 1956. (It is readily available as a .pdf file.) The first generation of his machine was called Multivac, but it develops into what we would call the internet, and then into something even more powerful.

The story starts as follows,

‘The last question was asked for the first time, half in jest, on May 21, 2061, at a time when humanity first stepped into the light. The question came about as a result of a five dollar bet over highballs, and it happened this way . . .

. . . Adell was just drunk enough to try, just sober enough to be able to phrase the necessary symbols and operations into a question which, in words, might have corresponded to this: Will mankind one day without the net expenditure of energy be able to restore the sun to its full youthfulness even after it had died of old age?

Or maybe it could be put more simply like this: How can the net amount of entropy of the universe be massively decreased?

Multivac fell dead and silent. The slow flashing of lights ceased, the distant sounds of clicking relays ended.

Then, just as the frightened technicians felt they could hold their breath no longer, there was a sudden springing to life of the teletype attached to that portion of Multivac. Five words were printed: INSUFFICIENT DATA FOR MEANINGFUL ANSWER.’

Spoiler alert time ― enough to say that, even though the machine could not answer the question in the year 2061, it does not give up searching for the answer. The final line of the story provides the answer to the question asked all those years ago in 2061.

I believe that Asimov regarded this story as his best work.

Great thought experiment, Tom! Love it.