As technology has invaded many facets of our lives, I am guessing that all of us have experienced the frustration of trying to reason with algorithmic minds. Have you ever raised your voice to a device, asking “Really!?” We might wonder if the application was designed by an unpaid intern, or if the designer ever tried to use it in realistic circumstances. But no amount of frustration will have an effect. The operating space is prescribed and rigid, so that no matter how many times we try, the thing will stubbornly execute the same boneheaded behavior.

Imagine that a self-driving car in a city detects a voluminous plastic bag in its way. It will stop and say, perhaps silently to itself: “Object.” “Object.” “Object.” “Object.” “Object.” “Object.” I could go all day. No, actually I can’t. But it can, and that’s the point. A really sophisticated version might say: “Bag.” “Bag.” “Bag.” “Bag.” Meanwhile, a human driver might look at the bag and, based on the way it waves in the breeze, decide that it’s mostly empty, just looks big, and is safe to drive over without even slowing down, thus avoiding interminable honks from behind.

Arguing with robots would likely be similarly tedious. No matter what insults you are compelled to fling after reaching your frustration threshold, all you get back is the annoyingly repetitive insult: “Meat bag.” “Meat bag.” “Meat bag.”

Meat bag brains have the advantage of being able to take in broader considerations and weave in context from lived experience. We can decide when algorithmic thinking is useful, and when it has limits. Unfortunately, I buy the argument from Iain McGilchrist that modern culture has increasingly programmed people to be more algorithmic in their thinking—in my view via educational systems, video games, and ubiquitous digital interfaces. I often feel like I’m arguing with robots, but of the meat variety.

Robotic Traits

Let’s start by sketching out some of the traits I associate with “robotic” thinking.

Algorithmic

Robots (and robotic thinkers) love algorithms. Algorithms are well-defined, produce consistent results, and bring a sense of both order and certainty. They effectively act as short-cuts that allow automation without deep thinking or understanding context along the way.

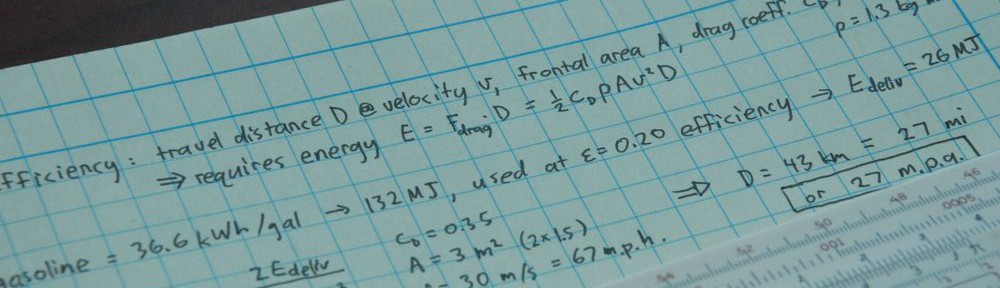

An example from physics would be in computing the time it takes to heat a cup (250 mL) of water from room temperature to boiling using a microwave oven that transfers energy at a rate of 1,000 W—given water’s specific heat capacity of 4.184 J/mL/K. Algorithmically, we could look at units (or a formula) and see that we multiply 4.184 J/mL/K by 250 mL and by the temperature change of 80 K (actually, the robot thinker might get stumped here because room temperature was not spelled out), then divide by 1000 J/s to get an answer of 83.68 seconds.
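Written out as a single line, the recipe looks like the following. The symbols are labels introduced here for illustration ($c$ for specific heat, $V$ for volume, $\Delta T$ for the temperature rise, $P$ for power), and the 80 K rise assumes a room temperature of roughly 20 °C, which the problem statement never specifies:

```latex
t = \frac{c \, V \, \Delta T}{P}
  = \frac{(4.184\ \mathrm{J/mL/K})\,(250\ \mathrm{mL})\,(80\ \mathrm{K})}{1000\ \mathrm{J/s}}
  = \frac{83{,}680\ \mathrm{J}}{1000\ \mathrm{J/s}}
  = 83.68\ \mathrm{s}
```

The units cancel to seconds, which is about all the reassurance the recipe offers on its own.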

I myself would think more intuitively and say that the more water I have and the higher I raise the temperature, the more energy it will take. So I would decide that each degree takes about 1000 J to accomplish (roughly 250 of the 4-per-milliliter number; hey, it works out to a kJ, or about a Btu, like the combustion energy in a wooden match, which seems okay), and now I need at least 80 of these to go from room temperature to boiling, or about 80 kJ. Since I’m flinging in 1 kJ every second, it will take at least about 80 seconds. Realistically, losses and heat flow out of the water as it warms will make it a bit more, so I’ll say 100 seconds. Obviously the answers are similar, but I scooped up various intuitions and checks along the way. I lived the experience and understood the meaning and context of each step. The result is imprecise, which is absolutely warranted for real-world application, where the inputs themselves tend to be approximate. Robotic thinkers have an allergic reaction to this seemingly more convoluted approach: “just tell me how to do it.” Their loss.
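For anyone who wants to see the two routes side by side, here is a minimal sketch. The function and constant names are invented for this illustration, and the 20 °C room temperature is an assumption implied only by the 80 K rise:

```python
# Inputs taken from the text. ROOM_TEMP_C is an assumption: the text never
# states room temperature, it only implies it through the 80 K rise.
SPECIFIC_HEAT_J_PER_ML_K = 4.184
VOLUME_ML = 250
POWER_W = 1000
ROOM_TEMP_C = 20
BOILING_TEMP_C = 100


def formula_time_s():
    """Plug-and-chug route: energy required divided by delivery rate."""
    delta_t = BOILING_TEMP_C - ROOM_TEMP_C
    energy_j = SPECIFIC_HEAT_J_PER_ML_K * VOLUME_ML * delta_t
    return energy_j / POWER_W


def rough_time_s():
    """Back-of-envelope route: ~1 kJ per degree, ~80 degrees, 1 kJ/s."""
    kj_per_degree = 1   # 250 mL times roughly 4 J/mL/K is about 1000 J
    degrees = 80        # room temperature up to boiling
    kj_per_second = 1   # a 1000 W microwave
    return kj_per_degree * degrees / kj_per_second


exact = formula_time_s()
rough = rough_time_s()
print(f"formula: {exact:.2f} s, rough estimate: {rough:.0f} s")
print(f"relative difference: {abs(exact - rough) / exact:.1%}")
```

The two numbers land within a few percent of each other, which is smaller than the uncertainty in the inputs (and much smaller than the padding to 100 seconds for real-world losses).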

Rigid and Literal

Robots and robotic thinkers like to operate in fully-defined spaces with clear rules. Our society values those who do this well. Such thinkers excel at games, and enjoy the safe space they create.

When a model is applied to a real-world scenario, the robot is likely to exhibit misplaced concreteness in the model, taking it literally. If the model says X will happen, then X becomes the reality. It’s reassuring, I suppose—providing a sense of containment or tractability.

Narrow-Boundary

Part of what allows robotic thinkers to advance in a task is whittling down the parameter space until it is manageable: arriving at the well-defined space with clear rules. Inevitably, this leaves out “squishy” aspects that may be important—or even essential—to the problem, but don’t lend themselves to algorithmic casting.

Left-Brain

I am no expert on left–right hemispheric differences, but am intrigued by the work of Iain McGilchrist, whose descriptions map well onto this space. In this parlance, robotic thinking is characteristic of what the left hemisphere excels at doing. I don’t want to sell it short: the left hemisphere offers powerful tools upon which my own career has relied quite heavily. It needs to be consulted in any of these matters.

Student Experiences

I often see robotic thinking in how students relate to math. They squirm upon seeing an equation surrounded by sentences. To me, the equation is a precise sentence that elucidates relationships. It’s overflowing with context and potential application. The surrounding text aims to bring this conceptual richness to life. Once a student absorbs the central idea (the whole point), an equation is simply a symbolic expression encapsulating that understanding. I would say that it’s like representing language (which itself communicates a concept) by a particular arrangement of letters (spelling), but equations are even less rigid, allowing arbitrary choices for the symbols. I can represent Einstein’s famous energy relation by peanut equals nugget times zip-squared—or any other crazy convention—using any zapf dingbat symbols I desire, as long as I identify peanut with energy, nugget with mass, and zip with the speed of light. In fact, I need not have been so parallel in the associations (my substitutes carried some intuitive relation to the original, unnecessarily).

Students squirm because what they want to see—they tell me—is a box containing the math and whatever steps are used to employ the math in an example. Given enough examples, they can map an assigned problem to an example and replicate the algorithmic steps to produce an answer without having to think much or truly understand what’s happening. Intermediate steps are not considered for the insights they might contain. Even the answer is seldom checked against intuition. If a student somehow manages to calculate that it will take 6 million seconds to heat a cup of water in a microwave oven, then that’s their answer. Just like a calculator will always spit out a number but have zero contextual understanding, many students will write down something, with little or no reflection on whether it makes sense.
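The missing reflex can be sketched in a few lines. This is only an illustration: the function names are made up, and the plausibility window of ten seconds to ten minutes is an assumed range for microwaving a cup of water, not a measured one:

```python
SECONDS_PER_DAY = 86_400


def describe(seconds):
    """Restate a duration in units a person can picture."""
    if seconds < 120:
        return f"{seconds:.0f} seconds"
    if seconds < 2 * 3600:
        return f"{seconds / 60:.0f} minutes"
    if seconds < 2 * SECONDS_PER_DAY:
        return f"{seconds / 3600:.0f} hours"
    return f"{seconds / SECONDS_PER_DAY:.0f} days"


def is_plausible(seconds, low_s=10, high_s=600):
    """Assumed window for heating a cup of water in a microwave."""
    return low_s <= seconds <= high_s


for answer in (84, 6_000_000):
    verdict = "plausible" if is_plausible(answer) else "check your work"
    print(f"{describe(answer)}: {verdict}")
```

Restating 6 million seconds as a number of days is usually all it takes for intuition to object.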

I’m not blaming students. It’s gotten worse over the years, which must trace to systemic influences rather than an improbable spate of defective individuals. I recently compared midterm exams that I constructed (thus employing the same style/difficulty in creating problems) for the same course in 2004 and 2023—populated by the same general-education audience, 19 years apart. Questions were similarly divided between quantitative (requiring calculation), semi-quantitative (requiring reasoning in numerical contexts), and conceptual (requiring core understanding).

| Attribute | 2004 | 2023 |
|---|---|---|
| Duration | 50 min | 50 min |
| Number of Multiple Choice | 30 | 15 |
| Number of True/False | 10 | 5 |
| Number of Short Answer | 5 | 3 |
| Allowed printed study guide | No | Yes |
| Allowed handwritten notes | No | Yes |
| Fraction turned in at 49 minutes | half | 5% |
| Raw score, average | 72% | 65% |

In 2004, the exam was about twice as long, yet a much higher fraction of students finished before the 50-minute mark. In 2023, I allowed students to bring in a printed study guide, from which I directly created the exam, item by item. They could also write anything they wanted on the sheet. Despite all this, the scores were lower in 2023. Other colleagues who have been at the job for decades report the same phenomenon: having to pare down content, difficulty, and number of problems to track declining student preparedness/capability. If I held to the same standard I did in 2004, I would probably be handing out more F and D grades than A and B grades. What do you think would happen to me if I didn’t bend to accommodate changing times? The university administration would notice, by god. We’re all in the same pickle.

Again, I don’t blame the students. I blame the fact that they have been trained to be robots, not thinkers. The market system has worked to make textbooks, lesson plans, and our educational system in general ever more pleasant for the customers, who are always right—by dint of the all-powerful money they hold. It turns out that boxes containing math recipes that anyone can follow go down well, receiving up-votes and becoming ubiquitous by demand. It feeds on itself: without having learned better “street” skills, the students are unprepared to be thrown into a more rigorous pedagogical experience, so the deficiencies perpetuate, resulting in watered-down classes at every level (and increased anxiety among students, who sense their tenuous grasp). My physics elders tend to be noticeably better educated in physics than I am, and I am better educated than present-day physics graduate students. This is not just a matter of accumulated experience: I was in better shape at the same stage, and my elders were in better shape than I was.

Robots in the Real World

My focus here is on students because I have seen the world of higher education up close, and have had the luxury of time to watch the trends develop. But I also encounter similar characteristics “out in the real world.” I have joked with friends that if they ever find themselves being chased by a robot, just throw out some previously-prepared QR codes that will send the robot down a time-consuming rabbit hole (maybe a website evaluating the question “should I chase humans?”). How can they resist?

Human robots likewise are easily distracted by narrow pursuits in which the “world” is reduced to something rather more like a game that has tidy boundaries, rules, and concerns. This is where “solutioneering” and techno-optimism are born. The game is to set aside messy domains that defy easy characterization—like ecology—and keep narrowing until the solution becomes pretty easy and seemingly obvious. Fossil fuels are both finite and creating a CO2 problem? Let’s set aside the broader context of ecological harm due to our energy use, material demands, the thorny question of how energy is used to fuel capitalist inequity, and fossil fuels’ inherent lack of intermittency and ease of producing high temperatures for manufacture. Having brushed those things aside, renewable energy is a fantastic solution. As we peel off the layers of contextual shielding, we first recognize the need to address energy storage. But subsequent layers render such game outcomes essentially worthless, in the end—eventually encompassing domains well past the narrow confines of technology. Yet, it’s psychologically safer, more fun, and more reassuring to stick to the superficial.

If I might be allowed to put this in robot terms, the situation is like a many-input AND logic gate. Success only results if all inputs are satisfied. No contextual “layer” can be ignored. Success and sustainability are synonymous here: we are interested in what can work long term, not things that have failure built in. Therefore, a successful response to our fossil fuel problem would at bare minimum have to address energy/technical parameters (the easy part), while not impeding success on other fronts (the impossible part). What’s tricky in this case is that “solving” an energy substitution game exacerbates material concerns, props up the current power differentials, and ignorantly maintains our current relentless assault on the community of life.
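In code, a many-input AND gate is a one-liner. The sketch below is illustrative only: the layer names paraphrase concerns raised in this post, and the True/False values are placeholders reflecting the argument that an energy substitution satisfies the technical layer while failing the others, not measurements of anything:

```python
def sustainable(layers):
    """Many-input AND gate: True only if every layer is satisfied."""
    return all(layers.values())


# Hypothetical scorecard for an energy-substitution proposal.
proposal = {
    "energy/technical": True,      # the easy part
    "material demands": False,
    "power differentials": False,
    "community of life": False,
}

print("sustainable:", sustainable(proposal))

# Flipping just one input to False is enough to fail the whole gate.
nearly_perfect = {name: True for name in proposal}
nearly_perfect["community of life"] = False
print("nearly perfect:", sustainable(nearly_perfect))
```

There is no partial credit in an AND gate: a proposal that passes three layers out of four produces the same output as one that passes none.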

Don’t let the robots (robot-minded humans) do this to us. For any proposal, ask: how does this dial back human overshoot, restore ecological health, make more room for the community of life, starve the beastly elements of our society, and set us on a path for actual long-term sustainability? Most things we hear about fail on all counts. But even failing on one is going to be a problem.

[Note: this post has some overlap with the one on context, partly due to the fact that drafts of both were initiated well before either was published.]
